APE Dataset

Dataset for Unconstrained 3D Human Pose Estimation

Download the APE Dataset (3.5 GB)

Download the Matlab scripts (1.4 KB)

Introduction

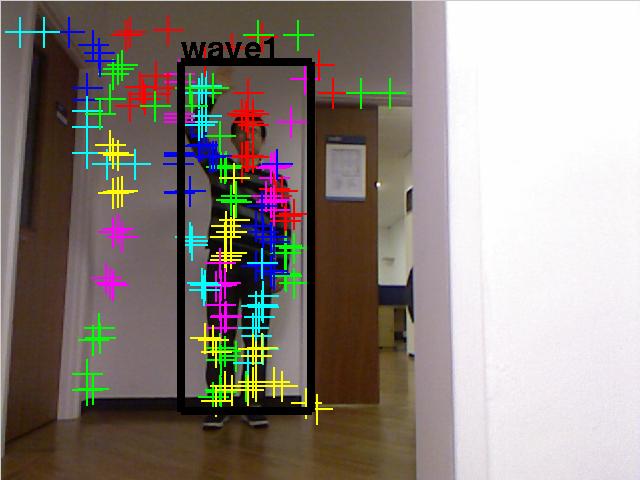

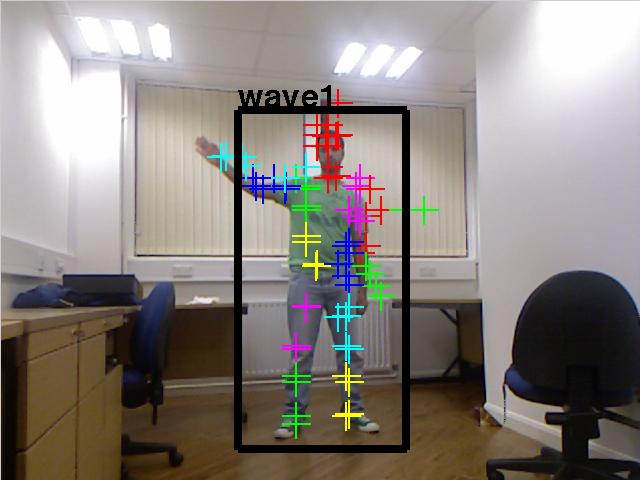

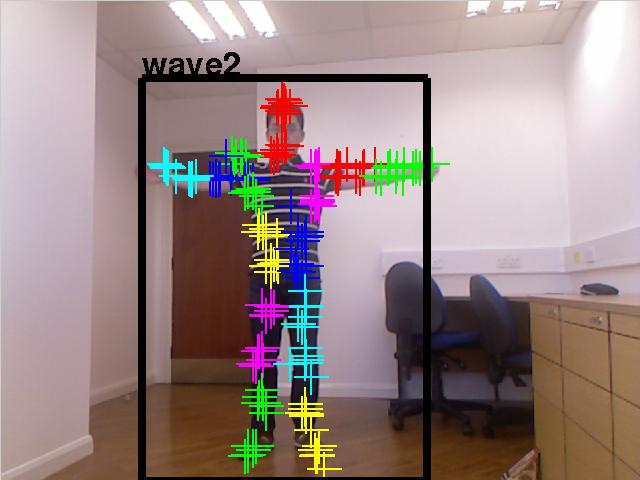

The Cambridge-Imperial APE (Action-Pose-Estimation) dataset was collected for 3D human pose estimation. Unlike other public datasets, the APE dataset provides multimodal data:

- RGB images

- Depth maps

- 3D human poses

- Human action labels

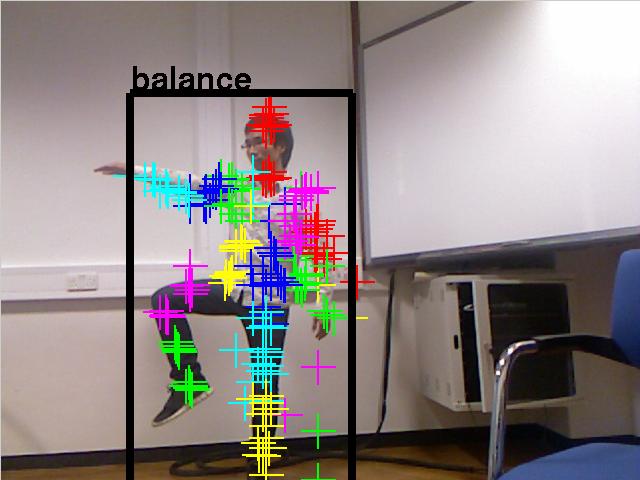

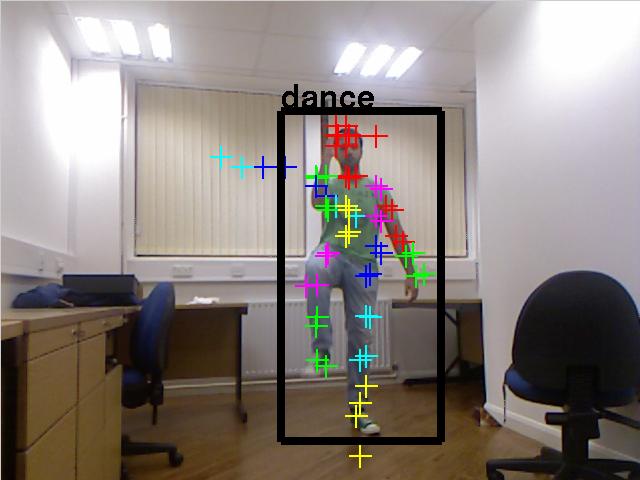

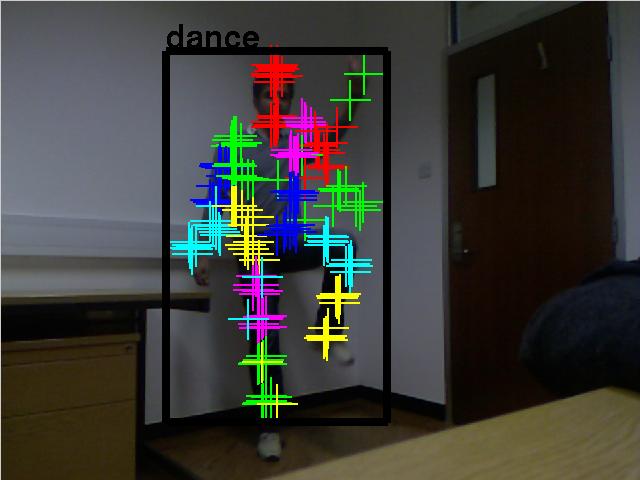

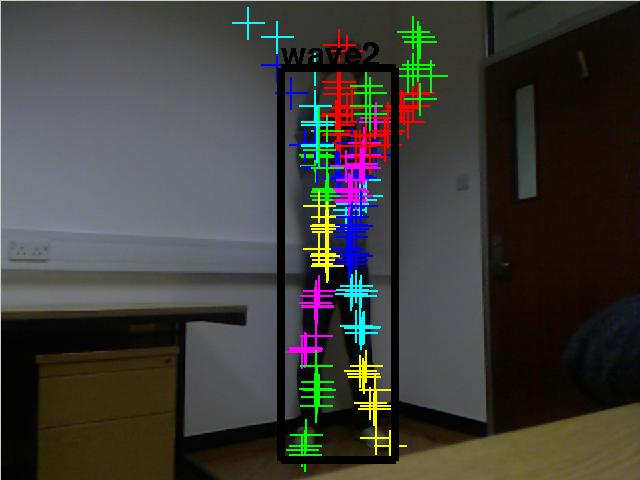

The APE dataset contains 245 sequences from 7 subjects performing 7 different categories of actions. Videos of each subject were recorded in different unconstrained environments, with changing camera poses and moving background objects.

This setting is considered challenging for traditional 3D human pose estimation because:

- no scene-dependent cues, such as foreground segmentation, can be used;

- testing is done in completely unseen environments.

Download

Download the APE Dataset (3.5 GB)

Download the Matlab scripts (1.4 KB)

When citing the APE dataset, please refer to:

Unconstrained Monocular 3D Human Pose Estimation by Action Detection and Cross-modality Regression Forest

Tsz-Ho Yu, Tae-Kyun Kim, Roberto Cipolla

In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, 2013

[IEEE Xplore Website]

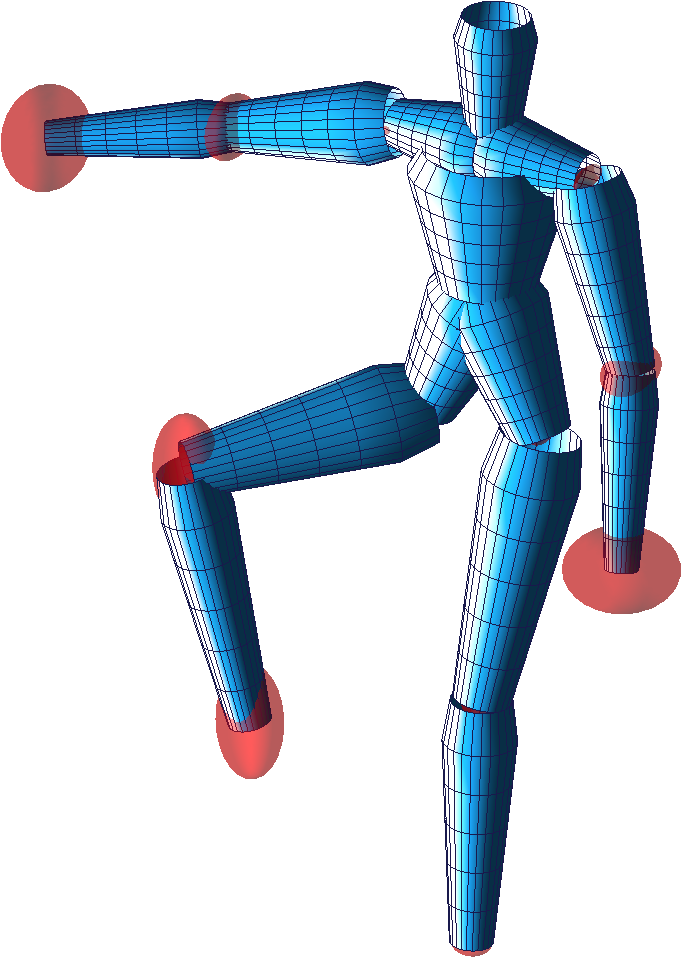

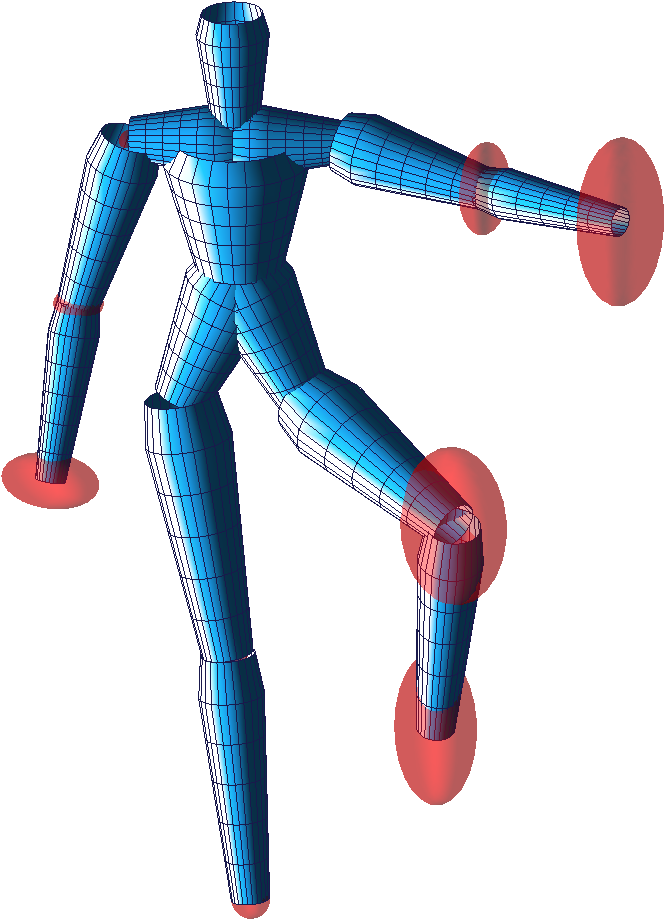

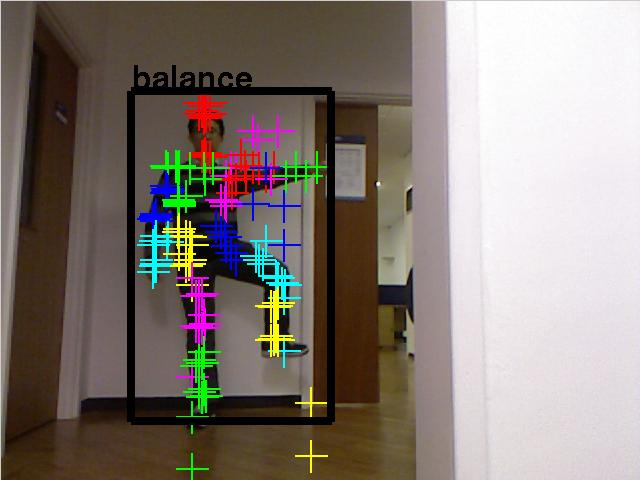

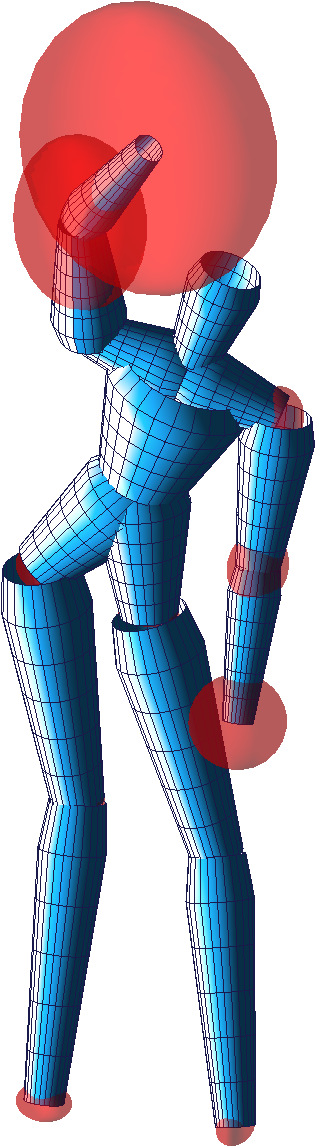

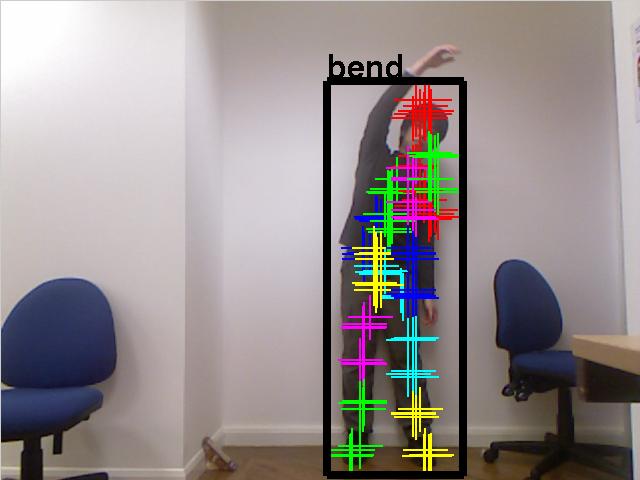

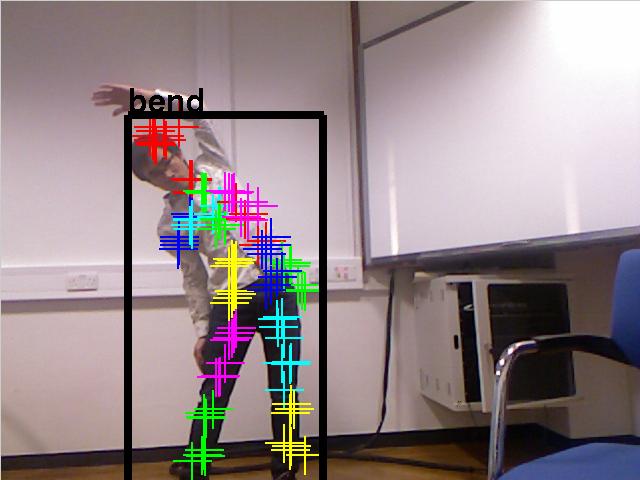

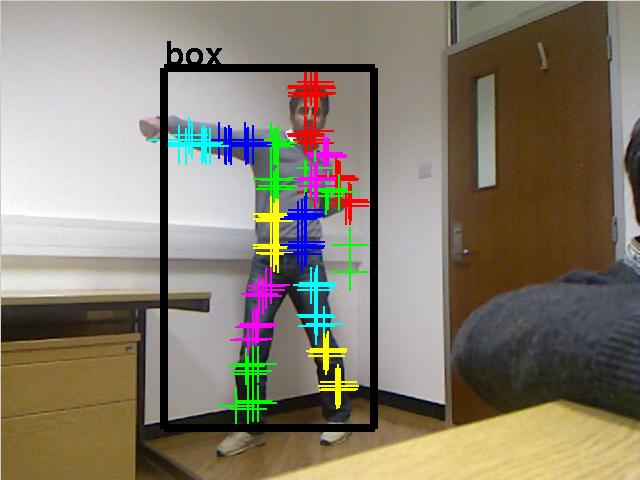

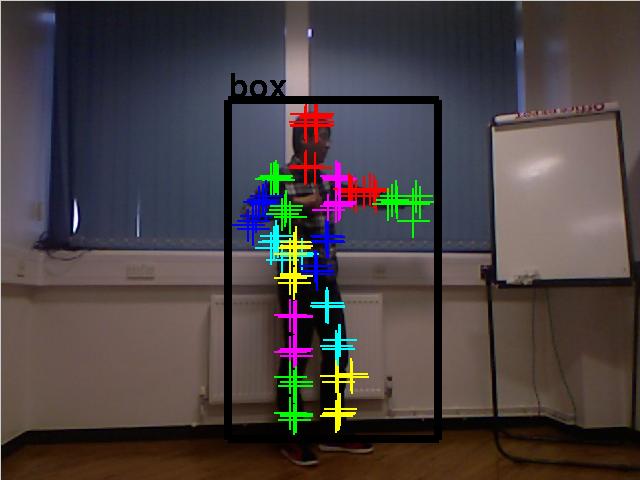

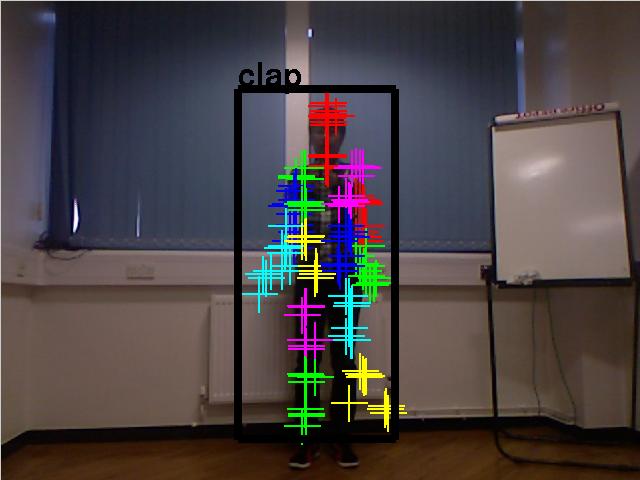

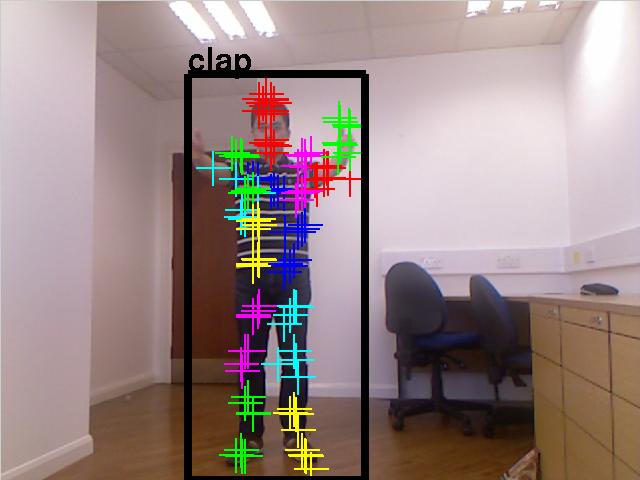

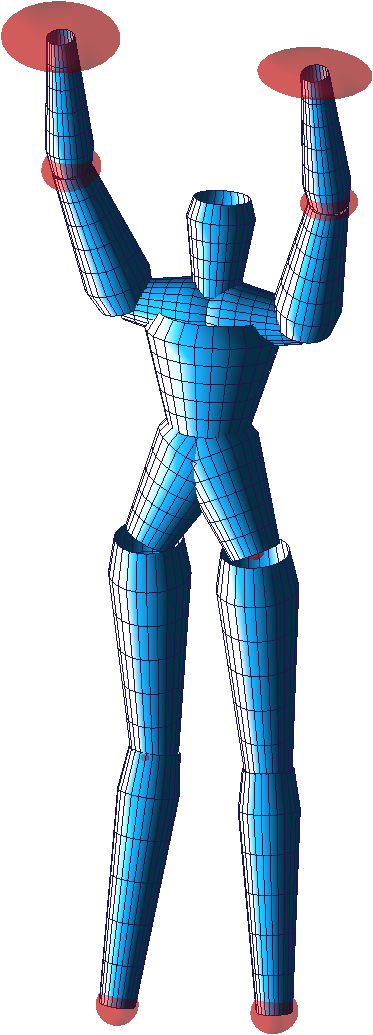

Action Classes

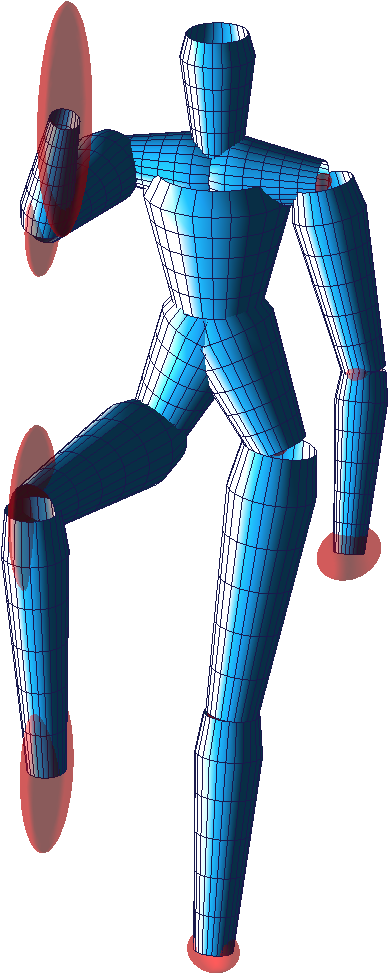

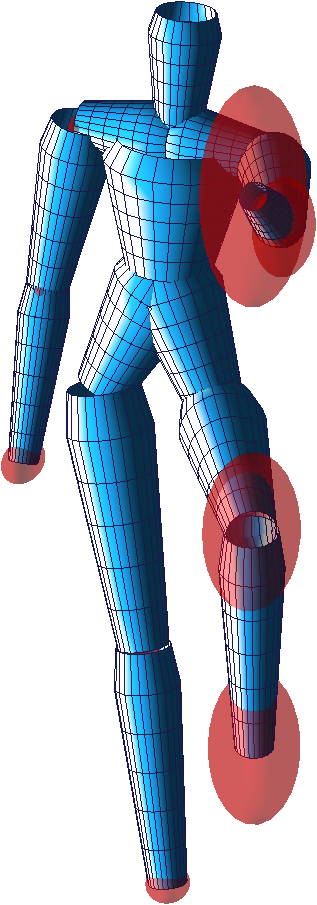

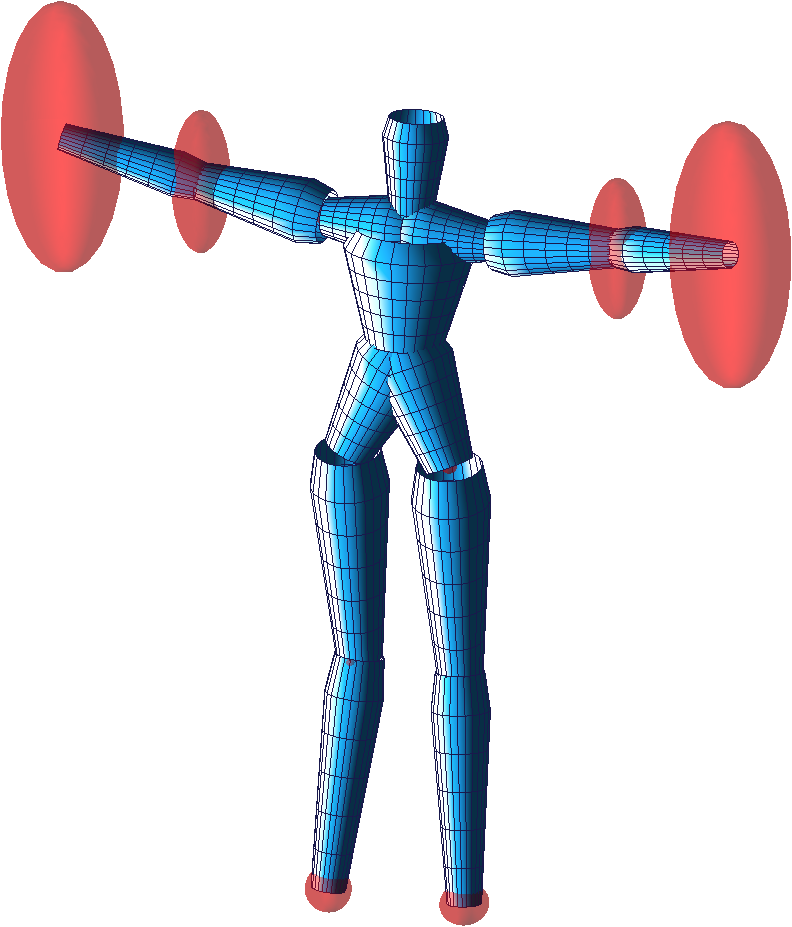

The seven action classes of the APE dataset are illustrated as follows:

Format

Action Index File

The action label of each sequence is stored in activityLabel.txt, which assigns an action class to each folder.

Image Data

The APE dataset provides both RGB images and depth maps of the video sequences. They are stored in folders named by action class and subject; e.g. clap04 is the folder that contains the "clapping" sequences performed by subject 4.
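As a rough illustration of this naming convention, the Python sketch below splits a folder name into its action prefix and subject number. The function name `parse_sequence_folder` is hypothetical, and the letters-then-digits pattern is an assumption generalised from the single example "clap04"; the authoritative action label for each folder is the one given in activityLabel.txt.

```python
import re
from typing import Tuple

# Assumed pattern: an alphabetic action prefix followed by a numeric subject id,
# generalised from the single example "clap04" (not a documented rule).
_FOLDER_RE = re.compile(r"^([A-Za-z]+)(\d+)$")


def parse_sequence_folder(name: str) -> Tuple[str, int]:
    """Split a sequence folder name such as "clap04" into ("clap", 4)."""
    match = _FOLDER_RE.match(name)
    if match is None:
        raise ValueError(f"unexpected folder name: {name!r}")
    action_prefix, subject = match.groups()
    return action_prefix, int(subject)


print(parse_sequence_folder("clap04"))  # ('clap', 4)
```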

Inside each sequence folder, RGB frames and depth maps are named "imageX.jpg" and "depthX.jpg" respectively, where X is the zero-based frame index. For example, depth00567.jpg is the depth map for frame 568.
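A minimal sketch of the frame-to-filename mapping follows. It assumes both RGB and depth files use the same five-digit zero padding seen in "depth00567.jpg"; the padding width is inferred from that one example rather than documented. The helper `frame_paths` is hypothetical and takes a 1-based frame number.

```python
from pathlib import Path
from typing import Tuple

# Assumption: indices are zero-padded to five digits, inferred from the single
# example "depth00567.jpg"; adjust PAD_WIDTH if the files on disk differ.
PAD_WIDTH = 5


def frame_paths(sequence_dir: Path, frame_number: int) -> Tuple[Path, Path]:
    """Return (rgb_path, depth_path) for a 1-based frame number."""
    if frame_number < 1:
        raise ValueError("frame numbers start at 1")
    index = f"{frame_number - 1:0{PAD_WIDTH}d}"  # file indices are zero-based
    return sequence_dir / f"image{index}.jpg", sequence_dir / f"depth{index}.jpg"


rgb, depth = frame_paths(Path("clap04"), 568)
print(rgb.name, depth.name)  # image00567.jpg depth00567.jpg
```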

3D Pose Data

3D pose data are stored in text files named after the corresponding sequence folder; e.g. clap04.txt contains the 3D pose data for the clapping action performed by subject 4 (folder "clap04").
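The sketch below pairs each sequence folder with its pose file by name. It assumes, without confirmation from this page, that the pose files sit next to the sequence folders in the dataset root; `pair_pose_files` is a hypothetical helper, not part of the Matlab scripts.

```python
from pathlib import Path
from typing import Dict


def pair_pose_files(root: Path) -> Dict[str, Path]:
    """Map each sequence folder name (e.g. "clap04") to its pose file ("clap04.txt").

    Assumption: pose files are stored beside the sequence folders in `root`.
    Folders without a matching .txt file are skipped.
    """
    pairs: Dict[str, Path] = {}
    for folder in sorted(p for p in root.iterdir() if p.is_dir()):
        pose_file = root / f"{folder.name}.txt"
        if pose_file.is_file():
            pairs[folder.name] = pose_file
    return pairs
```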

The format of the pose file is described below:

[N (# of frames)]

[No use] [No use] [joint 1] [joint 2] ... [joint 15]

... (N lines in total, one per frame)

[No use] [No use] [joint 1] [joint 2] ... [joint 15]

The first line contains the number of frames N in the video sequence, followed by N lines describing the 3D pose of the subject in each frame. The first two numbers on each line are placeholders and are currently unused; after them, each of the 15 joints is represented by 14 numbers, giving 2 + 15 × 14 = 212 numbers per line. Each joint is laid out as:

[joint 1] := [X] [Y] [Z] [location_conf] [R00] [R01] [R02] [R10] [R11] [R12] [R20] [R21] [R22] [rotation_conf]

[X, Y, Z] is the 3D spatial location of the joint. [location_conf] is the confidence of that location; a confidence of zero means the joint is not observed (e.g. it is occluded or missing from the data). The orientation of the joint is represented by a 3x3 rotation matrix with elements [R00] to [R22], and [rotation_conf] is the confidence of the joint's orientation.
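For readers not using Matlab, the following Python sketch parses a pose file according to the layout above. It is not a port of thyReadSkel.m; it assumes whitespace-separated values, that [Rij] is the element in row i and column j of the rotation matrix, and that a zero [location_conf] marks an unobserved joint. The names `read_pose_file` and `Joint` are hypothetical.

```python
import io
from dataclasses import dataclass
from typing import List, TextIO

NUM_JOINTS = 15
VALUES_PER_JOINT = 14  # X Y Z location_conf R00..R22 rotation_conf
UNUSED_PER_FRAME = 2   # two placeholder values at the start of each frame line
VALUES_PER_FRAME = UNUSED_PER_FRAME + NUM_JOINTS * VALUES_PER_JOINT  # 212


@dataclass
class Joint:
    position: List[float]        # [X, Y, Z]
    location_conf: float         # 0 means the joint is not observed
    rotation: List[List[float]]  # 3x3; assumed row-major: [R00 R01 R02], ...
    rotation_conf: float

    @property
    def visible(self) -> bool:
        return self.location_conf != 0.0


def read_pose_file(stream: TextIO) -> List[List[Joint]]:
    """Parse a pose file into a list of frames, each a list of 15 joints."""
    # Assumption: values are whitespace-separated; blank lines are skipped.
    lines = [line.split() for line in stream if line.strip()]
    num_frames = int(float(lines[0][0]))
    frames: List[List[Joint]] = []
    for values in lines[1:1 + num_frames]:
        if len(values) != VALUES_PER_FRAME:
            raise ValueError(f"expected {VALUES_PER_FRAME} values, got {len(values)}")
        numbers = [float(v) for v in values[UNUSED_PER_FRAME:]]  # drop placeholders
        joints = []
        for j in range(NUM_JOINTS):
            v = numbers[j * VALUES_PER_JOINT:(j + 1) * VALUES_PER_JOINT]
            joints.append(Joint(position=v[0:3], location_conf=v[3],
                                rotation=[v[4:7], v[7:10], v[10:13]],
                                rotation_conf=v[13]))
        frames.append(joints)
    if len(frames) != num_frames:
        raise ValueError(f"header says {num_frames} frames, found {len(frames)}")
    return frames


# Synthetic one-frame file: 15 identical joints at (1, 2, 3) with identity rotations.
row = "0 0 " + " ".join(["1 2 3 1 1 0 0 0 1 0 0 0 1 1"] * NUM_JOINTS)
frames = read_pose_file(io.StringIO("1\n" + row + "\n"))
print(len(frames), frames[0][0].position, frames[0][0].visible)  # 1 [1.0, 2.0, 3.0] True
```

The final lines parse an in-memory synthetic file rather than real data, so the printed values reflect only that test input. Checking the per-line count against 212 catches files whose layout differs from the one assumed here.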

Matlab Scripts

Use thyReadData.m to load the APE dataset into Matlab. thyReadSkel.m reads a 3D pose data file, and rot2euler converts rotation matrices to Euler angles.

License

The APE dataset is released under the MIT license.

Contact

For any inquiries or feedback regarding the APE dataset, please contact:

- Tsz-Ho Yu [E-Mail] [Homepage]

- Tae-Kyun Kim [E-Mail] [Homepage]